Google Research expert explains AI doesn’t always require private data at HLTH 2023

Abby Grifno

October 12, 2023

In a recent talk at HLTH 2023, James Manyika breaks down a common misconception among those exploring AI.

AI in the medical field

As AI has boomed in many fields in recent years, it's also steadily advancing healthcare technology. AI offers significant potential, and Google Research has begun taking full advantage.

Despite the possibilities, many critics point to AI's potential risks, especially concerning patient privacy.

The Future Healthcare Journal chronicled the possibilities of AI in healthcare, noting that it would likely be used to assist in diagnosis and treatment recommendations, as well as administrative activities.

The journal also noted the considerable challenges proponents of AI face, particularly issues related to transparency and privacy. As an emerging technology, AI can be difficult to understand. In the world of healthcare, it may have the potential to diagnose patients but may not be able to explain why the diagnosis was reached. Furthermore, many are concerned that, through collection and analysis, personal data may be released without patient consent.

Even though there are challenges with AI, there are many opportunities, and Google Research is confident it can resolve these concerns and reach life-saving breakthroughs.

New projects, more security

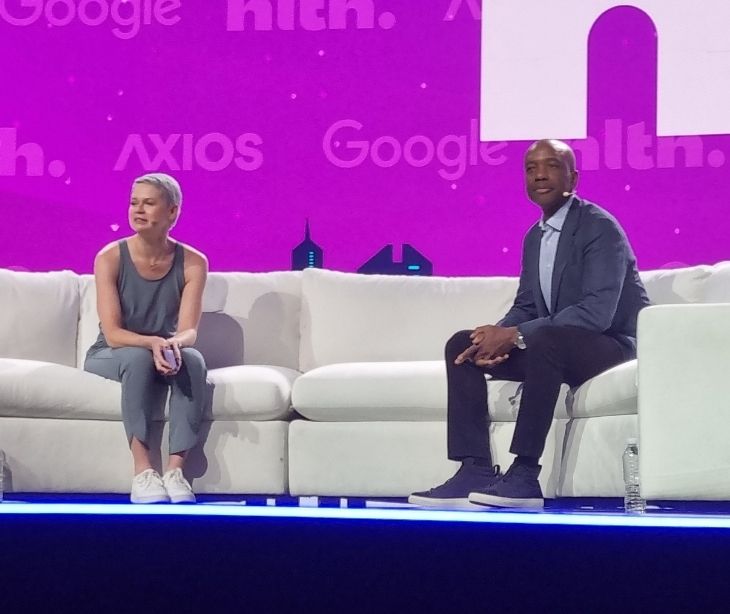

HLTH 2023 featured a Main Stage talk, "Bringing AI to Healthcare Responsibly," led by James Manyika of Google. Manyika, who serves as Senior Vice President of Technology & Society and oversees Research at Google, is working on breakthroughs in AI, machine learning, algorithms, and more.

In his talk, Manyika was interviewed by Axios journalist Erin Brodwin and took time to dispel what he believes is a common misperception. "This presumption, particularly in the age of AI, that all of [Google's advancements] require personal data, I think, is mistaken."

Manyika went on to explain that the majority of his projects with Google Research aid in healthcare without infringing on private data.

His current projects focus on taking large amounts of data and sorting it to find patterns. One of Google Research's projects, for instance, focuses on looking at mammography scans.

Another project is focused on screening patients for cancer. Manyika explains that doctors use a process called "contouring," in which they carefully examine CT scans to identify which cells are cancerous or could be affected; the result is used to outline a treatment plan.

According to Manyika, the process takes around seven hours, consuming the doctor's valuable time and lengthening wait times for patients seeking critical information. While contouring can take a doctor nearly a full workday, AI can complete the same task in minutes.

Regarding these projects, Manyika said, "There are a lot of applications in AI that don't make this presumption that you need somebody's private data."

The big picture

Despite significant and legitimate concerns about the use of AI in the medical field, Manyika shows that AI can still be productive without infringing on individual privacy.

As we continue learning more about the possibilities of AI, healthcare organizations should take note of how they can utilize artificial intelligence to care for patients while maintaining privacy and confidentiality.
