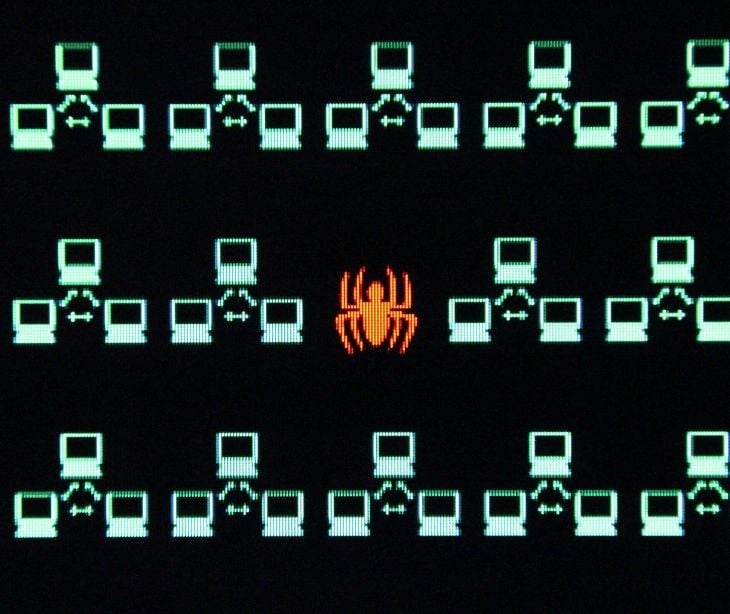

Spidering, also known as web crawling, is the process of automatically navigating websites and extracting information from them using programs called web crawlers or spiders. It is closely related to web scraping, which focuses on pulling specific data out of the pages a crawler retrieves. A web crawler is a bot or automated script that systematically moves through a website's pages, following links and gathering data. The collected data can include text, images, URLs, and other relevant information.

Search engines, for example, use web crawlers to index the content of websites so that users can find relevant information when conducting searches. Web scraping is also employed for various purposes, such as data mining, competitive analysis, and content aggregation.

How spidering works

- Initiating the spider: The process begins by deploying a web crawler, which is a script or bot programmed to navigate the web. Search engines like Google use sophisticated algorithms to determine which pages to crawl and how frequently.

- URL queue: The crawler starts with a list of seed URLs, which act as the initial entry points. As it visits a webpage, it extracts links to other pages, creating a queue of URLs to be visited.

- Data extraction: Upon reaching a webpage, the spider extracts relevant data according to its programming. This can include text, images, URLs, and other information specified by the user or the purpose of the crawling.

- Link following: The crawler follows links on the current page, adding new URLs to the queue for future exploration. This cycle repeats for each URL taken from the queue, allowing the spider to traverse large portions of the web.
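The steps above (seed URLs, a URL queue, data extraction, and link following) can be sketched as a breadth-first crawl. This is a minimal Python illustration, not how any particular search engine works: the `crawl` function, its `fetch` callback, the `max_pages` limit, and the rule of staying on the seed sites' hosts are all assumptions made for the example. `fetch` is passed in so the sketch runs without network access, and extraction is reduced to collecting links.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(
    seeds: List[str],
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 50,
) -> Dict[str, List[str]]:
    """Breadth-first crawl starting from seed URLs (hypothetical helper).

    `fetch` is supplied by the caller and returns a page's HTML, or None on failure.
    Returns {visited_url: [links found on that page]}.
    """
    queue = deque(seeds)                                 # URL queue, seeded with entry points
    seen = set(seeds)                                    # never enqueue the same URL twice
    allowed_hosts = {urlparse(s).netloc for s in seeds}  # assumption: stay on the seed sites
    graph: Dict[str, List[str]] = {}

    while queue and len(graph) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:                                 # fetch failed: skip this URL
            continue

        parser = LinkExtractor()                         # data extraction (here: links only)
        parser.feed(html)
        parser.close()

        links = []
        for href in parser.links:
            # Resolve relative links against the current page and drop #fragments.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc not in allowed_hosts:
                continue
            links.append(absolute)
            if absolute not in seen:                     # link following: queue new URLs
                seen.add(absolute)
                queue.append(absolute)
        graph[url] = links

    return graph
```

For example, calling `crawl(["https://example.com/"], pages.get)` with a dictionary `pages` that maps URLs to HTML strings visits every same-site page reachable from the seed. In practice `fetch` would wrap an HTTP client such as `urllib.request.urlopen` and should also apply the politeness rules described under the best practices.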


Significance of spidering

Search engine indexing

Search engines employ web crawlers to index the content of websites. This indexing facilitates quick and accurate retrieval of information when users perform searches, making the web more accessible and user-friendly.
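Search engines typically store the text their crawlers collect in an inverted index, which maps each term to the pages that contain it; looking up a term then avoids rescanning every page. The toy sketch below shows the idea in Python using only the standard library. The tokenizer, the AND-only `search` function, and the sample data are simplifying assumptions; production engines add ranking, stemming, and much more.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    # Lower-case alphanumeric word tokens; real engines also stem, drop stop words, etc.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each term to the set of URLs whose text contains it (an inverted index)."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Return URLs containing every query term (simple AND semantics, no ranking)."""
    terms = tokenize(query)
    if not terms:
        return set()
    # Copy the first posting set so the index itself is never modified.
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results
```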

Data mining

Spidering is a powerful tool for data mining, enabling businesses and researchers to gather valuable insights from diverse sources on the internet. This information can be used for market research, competitive analysis, and trend monitoring.

Content aggregation

Many websites use spidering to aggregate content from various sources, and automated traffic of this kind is substantial: a 2022 report by Imperva found that automated bots, which include web crawlers and scrapers, accounted for 42.3% of all internet traffic. Websites often use such bots to index, scrape, and aggregate content from different online sources efficiently.

Types of spidering

Spidering, or web crawling, can take on various forms and serve different purposes depending on the goals of the entity deploying the web crawler. Here are several types of spidering:

- General-purpose crawlers: These web crawlers are designed to systematically navigate the web and index content for search engines.

- Focused crawlers: Focused crawlers are targeted towards specific topics or themes. Instead of crawling the entire web, they concentrate on a predefined set of websites or content types.

- Incremental crawlers: Incremental crawlers revisit known pages and retrieve only the content that has changed since a previous crawl.

- Deep web crawlers: Deep web crawlers are designed to explore and index content that is not easily accessible through traditional search engines, such as pages behind search forms or logins.

- Vertical crawlers: Vertical crawlers are tailored for specific industries or verticals. For example, a vertical crawler focused on the healthcare industry would target health-related websites, forums, and databases.

- Scraping crawlers: Scraping crawlers, or scrapers, extract specific information from websites, such as product prices, reviews, or contact details. These crawlers are often used for data mining, market research, and competitive analysis.

- Social media crawlers: With the proliferation of social media, crawlers are employed to index and retrieve content from platforms like Facebook, Twitter, and Instagram. They help users find relevant posts, profiles, and hashtags.

- News crawlers: News aggregators and media outlets employ news crawlers to collect headlines, articles, and updates from diverse news sources.

- Real-time crawlers: Real-time crawlers focus on retrieving the latest and most up-to-date information available on the web.

- Enterprise crawlers: Enterprise-level web crawlers are employed within organizations to index and retrieve information from internal databases, documents, and intranet sites, facilitating better organization and accessibility of internal data.

- Link checkers: Link-checking crawlers scan websites for broken links, helping site owners maintain the health and integrity of their websites and ensuring a smoother user experience.

- Security crawlers: Security-focused crawlers are designed to identify vulnerabilities in websites and web applications by simulating cyberattacks and analyzing the security posture of web assets.
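As one concrete case from the list above, the core of a link checker is sorting each URL by the HTTP status it returns. The Python sketch below assumes a hypothetical `get_status` callback that returns a status code, or `None` when the request fails entirely. A real `get_status` would wrap an HTTP client; note that `urllib.request.urlopen` follows redirects automatically and raises `HTTPError` (whose `.code` holds the status) for 4xx and 5xx responses, so it would need extra handling to report those codes directly.

```python
from typing import Callable, Dict, List, Optional


def check_links(
    links: List[str],
    get_status: Callable[[str], Optional[int]],
) -> Dict[str, List[str]]:
    """Group URLs by outcome (hypothetical helper; `get_status` is caller-supplied)."""
    report: Dict[str, List[str]] = {"ok": [], "redirect": [], "broken": [], "unreachable": []}
    for url in links:
        status = get_status(url)
        if status is None:
            report["unreachable"].append(url)  # DNS failure, timeout, refused connection, ...
        elif status >= 400:
            report["broken"].append(url)       # 4xx/5xx, e.g. 404 Not Found
        elif status >= 300:
            report["redirect"].append(url)     # candidate for updating to the final URL
        else:
            report["ok"].append(url)           # 2xx success
    return report
```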

Spidering best practices

Spidering, or web crawling, comes with responsibilities to ensure ethical, legal, and efficient data extraction. Here are some best practices for spidering:

- Respect robots.txt: Follow the rules specified in a website's robots.txt file. This file provides guidelines on which parts of a site are open to crawling and which are off-limits. Ignoring robots.txt is widely considered unethical and can contribute to legal disputes.

- Crawl delay: Wait between successive requests to the same site, and honor any Crawl-delay value specified in its robots.txt file, to avoid placing an undue load on the website's server.

- User-agent identification: Identify your web crawler with a unique and descriptive user-agent header. This allows website administrators to recognize your crawler and contact you if any issues arise.

- Politeness and respect: Avoid aggressive crawling behavior that could overwhelm a website's server. Space out requests and prioritize the extraction of relevant data.

- Session handling: Implement session handling, such as persisting cookies between requests, to navigate websites that require authentication.

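- Data usage compliance: Make sure the way you collect and use data complies with applicable laws, the website's terms of use, and ethical norms, particularly when pages contain personal information.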

- Limit parallel requests: Restrict the number of simultaneous requests to a server to avoid overloading it.

- Update frequency: Adjust the frequency of your crawling activities based on the update frequency of the target website.

- Handle redirects: Follow HTTP redirects (3xx responses) correctly, limit the number of consecutive redirects to avoid loops, and record the final URL a redirect leads to.

- Monitoring and logging: Set up monitoring and logging mechanisms to keep track of your crawler's activities. Regularly review the logs for errors and warnings, and to confirm that the crawler is operating as intended.

- Testing and development: Test your crawler thoroughly during development to ensure that it is efficient, respects site guidelines, and produces accurate and reliable results.

- Obey rate limits: Many websites and APIs limit how many requests a client may make in a given period. Respect these limits to avoid being blocked and to maintain a positive relationship with the target website.

- Follow metadata guidelines: Pay attention to metadata such as the Last-Modified HTTP header and use it to determine whether a page has been updated since your last crawl.
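Several of the practices above (respecting robots.txt, honoring crawl delays, and identifying your crawler with a user-agent header) can be combined in one small helper. The following is a minimal sketch using Python's standard `urllib.robotparser` and `urllib.request` modules. The `PoliteFetcher` class, the `USER_AGENT` string, and the one-second default delay are hypothetical choices for illustration, and the robots.txt text is assumed to have been downloaded already. Python's parser only reads whole-number Crawl-delay values.

```python
import time
import urllib.request
import urllib.robotparser
from typing import Callable, Optional

# Hypothetical identifier: name the bot and point to a page explaining it.
USER_AGENT = "ExampleResearchBot/1.0 (+https://example.com/bot-info)"


class PoliteFetcher:
    """Checks robots.txt rules and spaces out requests to a single site."""

    def __init__(
        self,
        robots_txt: str,
        default_delay: float = 1.0,  # assumption: used when robots.txt sets no Crawl-delay
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.rules = urllib.robotparser.RobotFileParser()
        self.rules.parse(robots_txt.splitlines())
        # Honor a Crawl-delay directive for our agent (or "*") if the site declares one.
        declared = self.rules.crawl_delay(USER_AGENT)
        self.delay = float(declared) if declared is not None else default_delay
        self.clock, self.sleep = clock, sleep
        self.last_request: Optional[float] = None

    def allowed(self, url: str) -> bool:
        """True if robots.txt permits USER_AGENT to fetch this URL."""
        return self.rules.can_fetch(USER_AGENT, url)

    def wait_turn(self) -> None:
        """Sleep until at least self.delay seconds have passed since the last request."""
        now = self.clock()
        if self.last_request is not None:
            remaining = self.delay - (now - self.last_request)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last_request = now

    def build_request(self, url: str) -> urllib.request.Request:
        # Identify the crawler on every request so administrators can recognize it.
        return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
```

To fetch a page, a crawler would call `allowed(url)`, then `wait_turn()`, then pass `build_request(url)` to `urllib.request.urlopen`, which follows redirects automatically. Using one `PoliteFetcher` per host and issuing requests to that host one at a time also keeps parallel requests to any single server at one.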


Ethical considerations

While spidering provides numerous benefits, it's important to approach it ethically and responsibly. Some websites explicitly prohibit web scraping in their terms of use, and unauthorized spidering may lead to legal consequences. You must respect the rights of website owners and adhere to legal and ethical guidelines when extracting data from the web.

FAQs

What is the purpose of spidering?

Web search engines and some other websites use web crawling or spidering software to update their web content or indices of other sites' web content.

Are web crawlers legal?

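Web crawling is not illegal in itself, but whether a particular crawl is lawful depends on the jurisdiction, the kind of data collected, how it is accessed, and the website's terms of use. Crawling publicly available pages for your own purposes, such as market research or academic research, is generally lower risk, and in the United States some such uses may be defensible under the fair use doctrine. The complications start if you want to use scraped data for others, especially for commercial purposes.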

How can spidering be used to discover website vulnerabilities?

Web crawling, when used with a security-focused approach, can be employed to discover potential vulnerabilities in websites. Security professionals and ethical hackers use web crawlers to identify weaknesses in websites, helping organizations enhance their security posture.
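One simple, passive example of this is checking crawled pages for missing HTTP security headers. The Python sketch below assumes the crawler has already collected each page's response headers into a dictionary keyed by URL (a hypothetical input format), and `EXPECTED_HEADERS` is an illustrative, non-exhaustive list rather than a complete security audit. Checks like this belong only on sites you own or are authorized to assess.

```python
from typing import Dict, List

# Illustrative, non-exhaustive set of commonly recommended security response headers.
EXPECTED_HEADERS = [
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
]


def missing_security_headers(responses: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """For each crawled URL, list expected headers that were absent.

    Header names are compared case-insensitively, as HTTP header names are.
    URLs with no missing headers are left out of the result.
    """
    findings: Dict[str, List[str]] = {}
    for url, headers in responses.items():
        present = {name.lower() for name in headers}
        missing = [h for h in EXPECTED_HEADERS if h.lower() not in present]
        if missing:
            findings[url] = missing
    return findings
```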
