We messed up yesterday.

An errant script run by one of our customers created a mail loop that brought our encrypted email platform to its knees. While it's certainly not the first time a customer has created a mail loop, this one included a 5MB attachment. That attachment was sent tens of thousands of times in a very short span.

The service interruption was isolated to Paubox Encrypted Email customers. Customers using Paubox Marketing, Paubox Email API, and Inbound Security (part of Paubox Email Suite Plus & Premium) were not affected.

To be clear, yesterday’s service interruption was not caused by a breach or our systems being hacked.

Here’s what happened

At 6:44 AM PST, several of the instances (i.e., servers) we use for Paubox Encrypted Email triggered internal alarms for dangerously low disk space.

Messages from the 5MB mail loop were multiplying exponentially in our mail queues, which reside on cloud-hosted disks. Each time we increased disk space for our encrypted email cluster, the disks filled up again within 30 minutes.

Normally, once an email is sent, it and any attachments are removed from the mail server's queue, freeing up disk space. During yesterday's mail loop, however, new copies kept arriving faster than our servers could clear them, so the queues kept filling up.

Approximately four hours after our internal alarms started going off, I got in touch with the customer who had mistakenly created the mail loop.

After confirming it was in fact a mail loop on their end, we removed all existing mail loop messages from our mail queues. We then massively upsized CPU and RAM for our entire mail cluster so that the backlog would be processed faster.

While many emails were delayed by hours yesterday, we do not have any reports of emails that never arrived at their destination.

Whose fault was it?

As Founder CEO of Paubox, I take full responsibility for this.

Our ambition is to become the market leader for HIPAA-compliant email. To get there, our customers must be able to trust and depend on Paubox to encrypt and deliver their email.

This is a big deal to me. I apologize for the stress and problems we caused our customers yesterday.

What are we doing about it?

Here’s what we’re doing about yesterday’s mess.

Scaling infrastructure. First, we must continue to scale our email infrastructure. This involves more machines, faster machines, and better methods of automatically detecting and stopping mail loops. We will be looking at Email AI for this.

Free Phone Support. We have struggled for years with what to do about phone support. On one hand, customers really appreciate speaking to a person when things go wrong or they get stuck.

On the other hand, a bad actor can call Support, impersonate a customer, and gain access to highly sensitive data. How do we know that the person we're speaking to is actually a customer? This gets especially tricky as customers grow larger.

Starting Monday, however, we will have someone dedicated to answering the phone for Support during business hours (8AM – 5PM PST, Monday – Friday). It will be free for paying customers.

We will need to hire more staff to do this properly, which we will. We’ll also need to figure out a method for identifying customers, which we will.

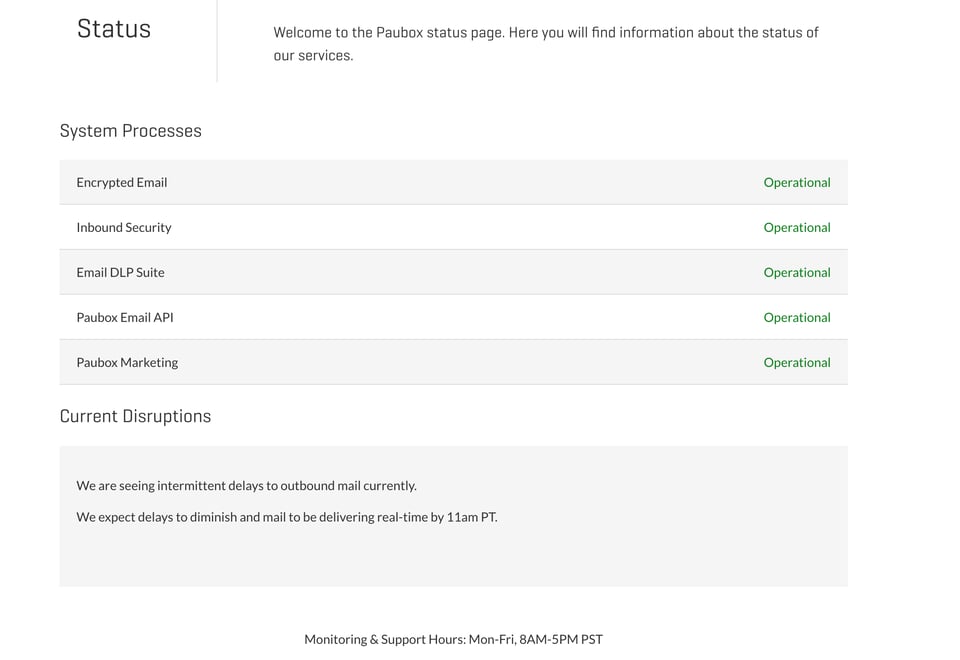

New System Status page. Our System Status page did not serve us well yesterday.

Here’s a screenshot I took when things were hitting the fan:

Encrypted Email says it’s Operational and yet down below, the info is entirely different:

“We are seeing intermittent delays to outbound mail currently.”

Starting next week, we will be using a new vendor for our System Status page.

See Related: Our New System Status Page

Mahalo,

Hoala Greevy

Founder CEO

Paubox, Inc.